
Adoption by policy makers of knowledge from educational research: An alternative perspective

Chris Brown

Institute of Education, University of London

The phrase knowledge adoption refers to the ways in which policymakers take up and use evidence. Whilst frameworks and models have been put forward to explain knowledge adoption activity, this paper argues that current approaches are flawed and do not address the complexities affecting the successful realisation of knowledge-adoption efforts. Within the paper, existing models are engaged with and critiqued, and an alternative perspective presented. It is argued that this new take on knowledge adoption provides a more effective account of the process. The paper also illustrates how this model has been tested and what its implications are for educational policy development.

Extant thinking as to how the knowledge adoption (KA) process might be expressed or most effectively undertaken is set out in a number of frameworks or models. This paper argues, however, that these models fail to address a number of issues that are central to any fundamental conceptualisation of KA or to its successful realisation. Specifically, it is argued that, individually, such models fail to capture fully the complexities of the KA process; that there is no satisfactory over-arching theory that accounts effectively for the process of research adoption and how it might be improved; that existing models fail to reflect the social nature of KA or the motivations of social actors to engage in such activity; and that the models proposed to date do not differentiate between the varying contexts that researchers may find themselves in. Extant models also fail to differentiate explicitly between the many analytical levels at which KA operates. Finally, it is argued that current models omit to differentiate between instrumental and conceptual uses of knowledge.

The aims of this paper, therefore, are: to describe existing models of KA and demonstrate how such models have been substantially critiqued; to illustrate how this critique has necessitated the development of a new model of KA and how this model was derived from a configurative systematic review of existing literature; to show how this model was tested empirically; and to illustrate the implications of the model for the notion of evidence-informed policy making more widely. This paper is derived from a project undertaken between 2009 and 2011. Its focus was: i) to review existing conceptualisations of KA and, in particular, to examine explanatory models of how evidence feeds into the policy making process (specifically with regard to the education sector in England and Wales); and ii) to put forward suggestions for how KA processes might be effectively implemented by researchers, with a view to increasing the use of evidence within policy making.

The notion that pull and push alone could account for the adoption of knowledge was problematised, however, both by the conceptualisation of the Enlightenment Model (Weiss, 1998) and through the development of the Two Communities Model (Amara, Ouimet & Landry, 2004). Within the Enlightenment Model, for example, KA was conceived not as a consequence of the findings of a single study or a body of knowledge but as the result of the percolation of evidence into the policy-making domain, causing policy-makers to think differently about particular issues over a period of time. The Two Communities Model, meanwhile, assumed that a cultural gap exists between policy-makers and practitioners on one hand, and academic researchers on the other. As a consequence, the model advanced the notion that a lack of understanding exists between these 'two communities', leading to low levels of communication (and so KA) between them. Mitton et al. (2007) observe that, as a result of the issues raised by both the Enlightenment and Two Communities Models, later conceptualisations of KA were grounded in the idea that the successful adoption of knowledge requires lengthy interaction rather than one-way conversation. Likewise, Nutley et al. (2007) posit that the findings of research do not 'speak for themselves'; they are interpreted, and this happens best through dialogue and engagement. As a result, models such as the Interaction/Communication and Feedback Model (Dunn, 1980; Yin and Moore, 1988; Nyden and Wiewel, 1992; Oh, 1997; Nutley, Davies, & Walter, 2002; Amara et al., 2004) and the Linkage and Exchange Model (Lavis, Lomas, Hamid & Sewankambo, 2006) were developed to explain KA as a dynamic, two-way process.

At the same time, other co-dependent or complementary models, developed in parallel, began to focus on individual aspects of the adoption process. For instance, the Organisational Interests Model (Amara et al., 2004) frames the argument that the size of organisations, their structures, the nature of their responsibilities and their needs may affect the propensity of professionals working within them to adopt and utilise or under-utilise research. The Engineering Model (Amara et al., 2004) suggests that the effective adoption of research depends on the characteristics of the research findings. These include content attributes (such as compatibility, complexity, observability, trialability, validity, reliability, applicability, etc.) and the type of research (basic-theoretical/applied, general/abstract, quantitative/qualitative, particular/concrete, and research domains and disciplines). Best and Holmes (2010), meanwhile, argue that four interconnecting factors (evidence and knowledge, leadership, networks, and communications) may best account for how knowledge is turned into action, and that these warrant further exploration.

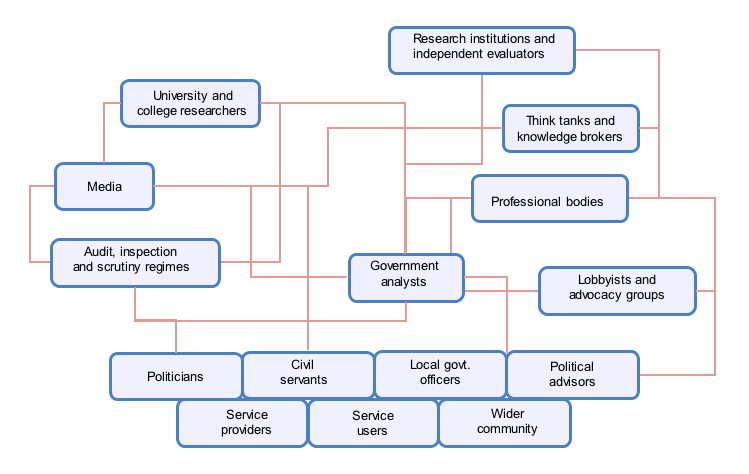

Rickinson, Sebba and Edwards (2011, p.80), reporting on the seminar series they held on user engagement, also outline a model of KA proposed at the seminars by Nutley. This is depicted in Figure 1, and identifies policymakers as politicians, civil servants and political advisors. It also proposes a range of both intermediaries and brokers through which research findings are translated before reaching those policymakers. Such intermediaries, Rickinson et al. note, mean that any translation is also filtered through or slanted by the perspectives of those involved and so made sympathetic to their particular viewpoint(s).


These models have also been subject to substantive critique. For example, the explanatory power of a number of them was tested empirically by Landry, Amara and Lamari (2003) in a survey of 833 Canadian government officials. Landry et al. concluded that whilst more interactive factors appear to best explain research adoption [2], overall, the process is far more complex than these existing models might suggest. Estabrooks, Thompson, Lovely, and Hofmeyer too argue that there is currently no satisfactory over-arching theory to explain effective research adoption, with most models tending to focus on "explanation rather than prescription" (2006, p.26). These sentiments echo the work of Wingens (1990) who describes the explanatory power of KA models as 'mediocre' while Cooper, Levin and Campbell (2009) argue that they are conceptually inadequate and fail to reflect the idea that knowledge use is a social process. Finally, Mitton et al. (2007, p.756) note that "there is very little evidence that can adequately inform what [KA] strategies work in what contexts".

A second point of critique is that current models do not explicitly differentiate between the many analytical levels at which KA operates or is affected. These include that of the individual policy maker/researcher, of groups or organisations, or the level of society more broadly. For example, in Nutley's conceptualisation of knowledge transfer (Figure 1, above), it is not clear whether references are made to individual policy makers, government departments, or to both. The same is also true for the other constituent parts of the model. Are references made to individual researchers or universities? Are intermediaries conceptualised as organisations or individual policy actors? This distinction is important, however, because at these different analytical levels, very different factors of influence are likely to come into play. Such factors will range in nature from the specific actions that might be undertaken by researchers and policy makers (as individual communicators of, or audiences for research), to issues of power relations which operate at more macro levels (Foucault, 1980).

Finally, current models of KA fail to differentiate between instrumental and conceptual uses of knowledge. That is, they fail to differentiate between the factors that concern whether policy makers will digest and consider knowledge (their conceptual use of evidence) and those factors which will impact on the actual creation of policy (the instrumental use of evidence). This distinction is important since, in a complex policy-making environment, solely considering conceptual uses of knowledge is likely to lead to researchers developing fundamentally different strategies for KA than those that might affect instrumental (or actual) use. For example, researchers seeking to further conceptual knowledge use might concentrate their efforts on how their research outputs are communicated. Enhancing instrumental use, on the other hand, may involve researchers spelling out to policy makers how given research can be used to improve a particular policy area.

Set out below is a brief summary of these internal and external factors. More detail about each may be found in Brown (2011). To begin with, the internal factors affecting KA are as follows.

The term 'privileged' researcher was introduced in Brown (2011) to describe any knowledge producer who can quickly and easily access policy makers (either because they work with or are favoured by them) and so encompasses a range of policy actors; for example, government or 'insider' researchers (Brown, 2009), or (privileged before 2010) those identified by Ball (2008, p.104) as the "intellectuals of new labour". As a result, it is argued that a second contextualising factor is the strength and nature of the relationship between researchers and policy makers, recognising that this changes over time (Stronach & MacLure, 1997; Rich, 2005; Cohn, 2006; Davies, 2006; Ball, 2008; Exley, 2008; Ball & Exley, 2010). Thus, researchers with strong, possibly ideologically-related ties to policy makers may have certain perceived organisational or sector level salience and so more chance of gaining access to, and having their research considered by, policy makers than those who do not. Whilst related to a number of the external factors above, this contextualising factor can be, and is, differentiated from them. In part this is due to the different relationships it is possible for researchers to have with policy makers; for instance, a researcher may simply be a provider of a contracted piece of research, won via a tender process. Alternatively, they may be a trusted advisor and ideological advocate, or openly sympathetic to the government. They may even be the friend of the policy maker concerned. Thus, a researcher may be credible and respected (a vital external factor) but may not have 'carte blanche' to discuss all and any policy ideas with policy makers. Likewise, there may be in place project-related structures which enable researchers to access policy makers with regard to specific findings, but on other topics or areas of research, these same researchers may not have recourse to approach policy makers directly or have their findings treated in the same way.

A third contextualising factor also emerged but does not explicitly form part of the model detailed in Figure 2 below. This third factor considers the nature of the relationship that is required between policy makers and researchers in order that KA activity might flourish. The main gist of this factor is that successful KA is dependent upon positive actions/strategies being employed by both narrators of, and audiences for, research outputs. In other words, the successful adoption of knowledge requires partnership working between researchers and policy makers, with each being required to play their part in negotiating the internal/external factors set out above. This factor is typified, for example, by the work of Dowling (2005; 2007; 2008; 2008a) who argues that the key phenomena of interest in the social world are the relationships between social actors. In particular, Dowling argues that the strategies employed by social actors will invariably be geared towards developing partnerships with others or towards preventing their occurrence. This third factor then provides the motivating sociological driver of KA. Building on the work of Dowling, KA is only likely to occur when both researcher and policy maker are actively seeking to engage with one another. This requirement for a combined positive effort, however, also removes the need to further consider this contextualising factor. KA occurs as the result of attempts to establish relationships; as such, any model or conceptualisation of KA can only be based upon the consideration of positive rather than negative actions (where, in the case of the latter, KA cannot be realised).

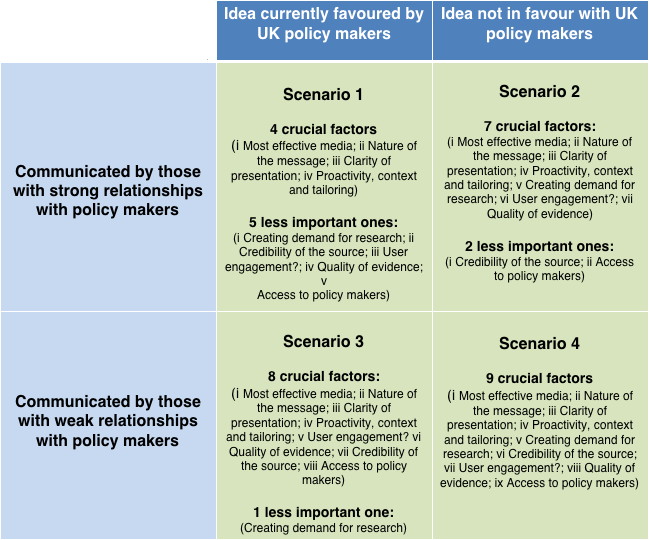

It is argued that the complexity of the KA process will vary with each of these four scenarios. This complexity is expressed by differentiating, within each scenario, between those internal and external factors that may be considered crucial to the process and those which are less important. For example, it has been shown that policy makers are most likely to be receptive to research where the underpinning idea is in favour. The crucial factors for a researcher with strong relationships with policy makers to consider in disseminating such research, therefore, are those internal factors associated with its effective communication. The other KA factors detailed above, whilst relevant, are less important because they have been pre-negotiated or dealt with by dint of the researcher/research's position vis-à-vis the contextualising factors (this is set out in Figure 2 as Scenario 1). The diametrically opposite position (Scenario 4) is considered to be where a researcher with a weak relationship with policy makers is attempting to disseminate knowledge to policy makers where the underpinning research does not relate to ideas currently in favour. Here, as well as the internal factors associated with effective communication, the researcher also has to consider relevant external factors controlled by policy makers: how to situate evidence in order to create a demand for it, how the perceived credibility of the source can be maximised, whether the audience has been engaged in policy networks or other forms of user engagement, how to demonstrate or account for the quality of the evidence, and how to gain access to policy makers. As a result, it is argued that the process of researchers with strong ties to policy makers disseminating favoured research to them may be considered far less difficult than processes associated with a weakly connected researcher attempting to inject unfavoured ideas into the policy-making process. In addition, intermediate positions also exist.
These scenarios and strategies are set out in full in Figure 2 below:

Figure 2: Factors that affect the adoption of research

It is argued that the model set out in Figure 2 significantly improves upon the way in which the KA process is currently conceived. For example, combining the notion that KA is dependent upon researchers and policy makers seeking to form partnerships with the idea that the effective adoption of research is a function of factors which are internal, external and contextualising provides the 'why' which, up until now, has been missing. Existing models, such as Demand Pull or that presented by Nutley in Figure 1, represent knowledge as something adopted/transferred/exchanged through chains or flows and via mechanics; it can be claimed that this type of representation is concerned solely with process. The model illustrated in Figure 2, on the other hand, represents a different conceptualisation: that knowledge flows can only come into being if policy makers and researchers are motivated to form a relationship with one another and, simultaneously, that their actions must be directed towards achieving KA/overcoming barriers to achieving the adoption of knowledge as an end goal.

The 'how' of KA is also further developed. In representing KA as a function of both internal and external factors, the model illustrates the hurdles that researchers will need to develop successful strategies to negotiate if they are to communicate effectively or disseminate evidence to policy makers, and vice versa for policy makers attempting to act as effective audiences. At the same time, Figure 2 illustrates the ways through which policy makers can seek to undermine any value research evidence might provide, should they wish to develop policy without being encumbered with what might be viewed as inconvenient research messages. For example, interview data in Brown (2011) revealed that policy makers often promote a 'deficit' model of research; that is, the view that it is researchers alone who are responsible for the failure of any actualisation of evidence-informed policy (Perry et al., 2010). This then means that policy makers can target factors such as the 'quality of the evidence', the 'clarity of presentation', the 'nature of the message', etc., as specific reasons for not taking on board the findings of a given study.

In utilising the first two contextualising factors, Figure 2 also illustrates how the actual communicator of the research and, correspondingly, their position with regard to policy makers have as much of a role to play in determining whether KA will occur as the nature of the research (i.e., whether it relates to an idea currently favoured by policy makers). As such, unlike past models of KA, the model highlights the differences in complexity that accrue depending upon the situation at hand, rather than assuming equality in all situations. Accordingly, it is suggested that KA becomes easier when power is afforded to researchers (i.e., they are privileged and so have strong ties with policy makers) or power is afforded to the idea to which their research pertains. That four scenarios are presented also suggests that the situations researchers and policy makers will find themselves in can change. This reflects comments by Rickinson et al. (2011) who note that it may be considered simplistic to see the policy community as homogeneous in terms of its likelihood to value or embrace evidence. Similarly, it can also be regarded as simplistic to assume that individual policy makers will treat all research as equally valid and so will adopt all findings, whether or not such research sits within the paradigms of the epistemologies and ideologies which are acceptable to policy makers.

Finally, the model is based on the premise that KA is relationship dependent and, as such, occurs at the level of the individual project/researcher/policy maker (i.e., it is individuals not organisations who adopt or produce research findings). At the same time, however, the complexity of the situation(s) affecting the KA process is determined by more macro factors, such as those existing at an organisational level or by factors which affect the nature of power relations between researchers and policy makers. As a result, this new model sets out the myriad KA scenarios that researchers and policy makers will encounter, along with the specific issues that need to be considered and negotiated by them in order that KA can be successfully realised.

| Group/view point | Number |
| --- | --- |
| Politicians based in England and Wales | 2 |
| Civil servants based in England and Wales | 4 |
| Researchers considered from the literature, or self identified, as favoured by politicians or civil servants | 9 |
| Researchers considered from the literature, or self identified, as less favoured by politicians or civil servants | 6 |
| Academic researchers critical of the concept of evidence-informed policy | 4 |
| Academic researchers in favour of evidence-informed policy | 11 |
| Respondents belonging to think tanks, political advisors or those operating at the higher levels of Davies' (2006) policy-making 'food chain' | 3 |
| Total | 24 |

Following the interviews, abductive thematic analysis was employed to identify incipient thought relating to the model and its operation. Mason (2002, p.180) defines 'abductive' analysis as a process through which "theory, data generation and data analysis are developed simultaneously in a dialectical [fashion]". Mason's (2002) approach thus accounts for the way in which themes and codes were derived from the interview data and how they enabled the augmentation of extant literature. Simultaneously, the validity of the findings was established. In particular, Lincoln and Guba's (1985) technique of 'member-checking' was adopted; this ensured that the study's interpretations and conclusions were thoroughly tested with those who participated. In addition, interpretive rigour was also achieved through the use of verbatim quotations, an approach which accords with the request made by Fereday and Muir-Cochrane (2006) for transparent 'illustration'. It is argued that the positive responses received from respondents after assessing the study's findings, combined with the direct reflections of the participants in the reporting of the analysis, add a further level of face validity to the analysis presented below.

You're judged by the quality of your previous work. If you've had the good ideas in the past, then people are going to come to you in the future. (Consultancy/think tank #1)

Academics, too, affirmed that the reputation of a knowledge provider and the regard in which they are held positively affect how policy makers receive their work. As a result, if one is considered reputable, the process of KA becomes simpler. For instance:

People are interested in hearing what I've got to say for two reasons; one - over a period of time I've generated a reputation for knowing a lot and being at the forefront of thinking about education... and two - I think this is important for policy makers - I've actually been there and done it... So those two things, plus my writing knowledge, do mean that Education Ministers or Prime Ministers are interested to speak to me. So, yes, absolutely! (Academic #2)

All respondents also intimated, as suggested by the model, that the factors which need to be negotiated by researchers with strong ties to policy makers are fewer and less complex than those which need to be overcome by those who do not:

I suppose it's the same in any walk of life... you find somebody that you feel is aligned with you and you trust them, and therefore you don't need chapter and verse. (Academic #3)

Again, these comments reflect findings from the initial literature review that 'privileged' or strongly tied researchers have more chance of influencing (or have fewer barriers to overcome in their attempts at influencing) policy makers than those who are not. Interview data also lent weight to the notion that the level of favour afforded to the evidence in question is vital to such knowledge being adopted. As one academic noted:

The topic has got to be pertinent... something that's very esoteric is unlikely to engage policy makers unless they are very, very unusual. (Academic #10)

In addition, in Brown (2011) the existence of strategies designed to negotiate the contextualising, internal and external factors which form Figure 2 is also identified. As a result, it is contended that KA can be facilitated by the employment of one, all, or any combination of four approaches. These have been described as: academics providing outputs which attempt to meet policy makers' and politicians' specific requirements from research ('policy-ready' strategies); researchers seeking to effectively communicate and/or use effective techniques or channels to promote their research ('promotional' strategies); academics engaging in 'traditional' academic behaviour ('traditional' strategies); and academics attempting to shift their relative position with regard to how 'privileged' they are by policy makers (which affects the ease with which they can access or influence them), or how policy makers perceive the policy context to which their research pertains ('contextual' strategies).

What's more, the use of different combinations of these strategies by respondents also indicates that KA can be regarded as a contextually specific social process. Respondents suggested and also behaved in ways to indicate that different approaches to KA are required in different circumstances. For example, this is illustrated in Brown (2011) by comparing the KA strategies employed by Barber and Mourshed in the production of their 2007 study, How the world's best..., to those used by Sylva et al. (2007) in promoting the Effective Pre-School and Primary Education 3-11 longitudinal study. Overall, then, not only did Figure 2 ring true with interviewees (i.e., it had face validity), but respondents also behaved in ways which suggest that its description of how KA might operate is representative of the social world more generally.

It is argued that the resulting model represents a clear perspective, distinct from that provided by existing frameworks. In doing so, it can be argued that the model meets the requirements set out by Cooper and Levin (2010, p.15) who request that conceptualisations of research use "move past formulations such as 'research use is complex and multifaceted', to describe that complexity and its component elements so that these can be analysed and assessed". As a result, Figure 2 may be seen to move current understandings of research adoption to a point where "we can design and implement more effective interventions that target the areas that have the greatest potential to improve systems" (2010, p.15).

Finally, while the analysis and conclusions from this study relate directly to the sphere of educational research and to educational policy making, it may be possible to further generalise and to argue that my findings might also have implications for other policy sectors within England and Wales (such as health, justice, social care, etc.) or even for other policy jurisdictions. Given the lack of data to support any such assertions, I suggest that they may only stand as theoretical arguments, heavily laden with assumption, and are therefore a prime subject for future research effort.

| [1] | See: http://www.oise.utoronto.ca/rspe/KM_Products/Terminology/index.html |

| [2] | Empirical analysis by Landry et al. (2003) indicates that the drivers of research adoption put forward by the Two Communities Model do seem to provide effective indicators as to whether knowledge will be adopted by policymakers. Likewise, the Interaction/Communication and Feedback Model successfully explains some of the key drivers involved in research adoption. Landry et al. suggest, however, that the determinants of research adoption, as postulated by the Organisational Interests Model, are mixed in terms of how well they predict whether research will successfully be adopted by policy makers and that factors postulated by the Engineering Model fail to effectively explain the processes involved in the successful adoption of research. |

| [3] | The report's presentation style may be quickly ascertained via: http://mckinseyonsociety.com/how-the-worlds-best-performing-schools-come-out-on-top/ accessed 1 November 2011. |

Ball, S. (1995). Intellectuals or technicians? The urgent role of theory in educational studies. British Journal of Educational Studies, 43(3), 255-271. http://www.jstor.org/stable/3121983

Ball, S. (2008). The education debate. Bristol: The Policy Press.

Ball, S. & Exley, S. (2010). Making policy with 'good ideas': Policy networks and the 'intellectuals' of New Labour. Journal of Education Policy, 25(2), 151-169. http://dx.doi.org/10.1080/02680930903486125

Barber, M. & Mourshed, M. (2007). How the world's best performing school systems come out on top. London: McKinsey and Company. http://mckinseyonsociety.com/how-the-worlds-best-performing-schools-come-out-on-top/

Best, A. & Holmes, B. (2010). Systems thinking, knowledge and action: Towards better models and methods. Evidence and Policy: A Journal of Research, Debate and Practice, 6(2), 145-159. http://dx.doi.org/10.1332/174426410X502284

Brown, A. & Dowling, P. (1998). Doing research/reading research: A mode of interrogation for education. London: Falmer Press.

Brown, C. (2009). Effective research communication and its role in the development of evidence-based policy making. A case study of the Training and Development Agency for Schools. MRes Dissertation, University of London, Institute of Education.

Brown, C. (2011). What factors affect the adoption of research within educational policy making? How might a better understanding of these factors improve research adoption and aid the development of policy? DPhil Dissertation, University of Sussex.

Campbell, S., Benita, S., Coates, E., Davies, P. & Penn, G. (2007). Analysis for policy: Evidence-based policy in practice. London: HM Treasury.

Cohn, D. (2006). Jumping into the political fray: Academics and policy making. IRPP Policy Matters, 7(3), 1-31. http://www.irpp.org/pm/archive/pmvol7no3.pdf

Cooper, A. & Levin, B. (2010). Some Canadian contributions to understanding knowledge mobilization. Evidence and Policy: A Journal of Research, Debate and Practice, 6(3), 351-369. http://dx.doi.org/10.1332/174426410X524839

Cooper, A., Levin, B. & Campbell, C. (2009). The growing (but still limited) importance of evidence in education policy and practice. Journal of Educational Change, 10(2-3), 159-171. http://dx.doi.org/10.1007/s10833-009-9107-0

Council for Science and Technology (2008). How academia and government can work together. London: DIUS.

Court, J. & Young, T. (2003). Bridging research and policy: Insights from 50 case studies. London: ODI.

Davies, H., Nutley, S. & Smith, P. (2000). What works? Evidence-based policy and practice in public services. Bristol: The Policy Press.

Davies, P. (2004). Is evidence-based government possible? Jerry Lee lecture to Campbell Collaboration Colloquium, Washington DC 19 Feb 2004. http://www.sfi.dk/graphics/campbell/dokumenter/artikler/is_evidence-based_government_possible.pdf

Davies, P. (2006). Scoping the challenge: A systems approach. National Forum on Knowledge Transfer and Exchange, Toronto Canada, 23-24 October 2006. http://www.chsrf.ca/migrated/pdf/event_reports/philip_davies.ppt.pdf

Dowling, P. (2005). Treacherous departures. [accessed 14 Nov 2010, not found 18 Aug 2012]. http://homepage.mac.com/paulcdowling/ioe/publications/dowling2005/TreacherousDepartures.pdf

Dowling, P. (2007). Organizing the social. [accessed 14 Nov 2010, not found 18 Aug 2012]. http://homepage.mac.com/paulcdowling/ioe/publications/dowling2007.pdf

Dowling, P. (2008). Unit 4 Generating a research question. In Research and the Theoretical Field Lecture Pack and Reading Pack. London: University of London.

Dowling, P. (2008a). Mathematics, myth and method: The problem of alliteration. [accessed 14 Nov 2011; not found 18 Aug 2012]. http://homepage.mac.com/paulcdowling/ioe/publications/dowling2008a.pdf

Dunn, W. N. (1980). The two communities metaphor and models of knowledge use: An exploratory case survey. Science Communication, 1(4), 515-536. http://dx.doi.org/10.1177/107554708000100403

Estabrooks, C., Thompson, D., Lovely, J. & Hofmeyer, A. (2006). A guide to knowledge translation theory. The Journal of Continuing Education in the Health Professions, 26(1), 25-36. http://dx.doi.org/10.1002/chp.48

Exley, S. (2008). The politics of specialist school policy making in England. London: University of London.

Fereday, J. & Muir-Cochrane, E. (2006). Demonstrating rigor using thematic analysis: A hybrid approach of inductive and deductive coding and theme development. International Journal of Qualitative Methods, 5(1), 1-11.

Foucault, M. (1980). Power/knowledge: Selected interviews and other writings, 1972-1977. New York: Pantheon.

Gilchrist, A. (2000). The well-connected community: Networking to the 'edge of chaos'. Community Development Journal, 35(3), 264-275. http://dx.doi.org/10.1093/cdj/35.3.264

Gladwell, M. (2000). The tipping point: How little things can make a big difference. London: Little Brown.

Huberman, M. (1990). Linkage between researchers and practitioners: A qualitative study. American Educational Research Journal, 27(2), 363-391. http://dx.doi.org/10.3102/00028312027002363

Innvaer, S., Vist, G., Trommald, M. & Oxman, A. (2002). Health policy-makers' perceptions of their use of evidence: A systematic review. Journal of Health Services Research & Policy, 7(4), 239-244. http://dx.doi.org/10.1258/135581902320432778

Kirst, M. (2000). Bridging education research and education policymaking. Oxford Review of Education, 26(3-4), 379-391. http://dx.doi.org/10.1080/713688533

Landry, R., Amara, N. & Lamari, M. (2003). The extent and determinants of utilization of university research in government agencies. Public Administration Review, 63(2), 192-205.

Lavis, J., Robertson, D., Woodside, J., McLeod, C. & Abelson, J. (2003). How can research organizations more effectively transfer research knowledge to decision makers? The Milbank Quarterly, 81(2), 221-248. http://dx.doi.org/10.1111/1468-0009.t01-1-00052

Lavis, J., Lomas, J., Hamid, M. & Sewankambo, N. (2006). Assessing country-level efforts to link research to action. Bulletin of the World Health Organization, 84, 620-628. http://www.who.int/bulletin/volumes/84/8/06-030312.pdf

Levin, B. (2004). Making research matter more. Education Policy Analysis Archives, 12(56), 1-20. http://epaa.asu.edu/ojs/article/view/211

Levin, B. (2008). Thinking about knowledge mobilization. Paper prepared for an invitational symposium sponsored by the Canadian Council on Learning and the Social Sciences and Humanities Research Council of Canada, 15-18 May, 2008.

Lincoln, Y. & Guba, E. (1985). Naturalistic inquiry. Newbury Park, CA: Sage.

Lindblom, C. & Cohen, D. (1979). Usable knowledge: Social science and social problem solving. New Haven, CT: Yale University Press.

Mason, J. (2002). Qualitative researching. London: Sage.

Mitton, C., Adair, C., McKenzie, E., Patten, S. & Waye-Perry, B. (2007). Knowledge transfer and exchange: Review and synthesis of the literature. The Milbank Quarterly, 85(4), 729-768. http://dx.doi.org/10.1111/j.1468-0009.2007.00506.x

Moore, G., Redman, S., Haines, M. & Todd, A. (2011). What works to increase the use of research in population health policy and programmes: A review. Evidence and Policy: A Journal of Research, Debate and Practice, 7(3), 277-305. http://dx.doi.org/10.1332/174426411X579199

Nutley, S.M., Davies, H.T.O. & Walter, I. (2002). Learning from the diffusion of innovations. Edinburgh: Research Unit for Research Utilization, University of St. Andrews.

Nutley, S.M., Walter, I. & Davies, H.T.O. (2007). Using evidence: How research can inform public services. Bristol: The Policy Press.

Nyden, P. & Wiewel, W. (1992). Collaborative research: Harnessing the tensions between researchers and practitioners. The American Sociologist, 23(4), 43-55.

Oancea, A. & Furlong, J. (2007). Expressions of excellence and the assessment of applied and practice-based research. Research Papers in Education, 22(2), 119-137. http://dx.doi.org/10.1080/02671520701296056

Oh, C. (1997). Explaining the impact of policy information on policy-making. Knowledge and Policy, 10(3), 25-55. http://dx.doi.org/10.1007/BF02912505

Oxman, A., Lavis, J., Lewin, S. & Fretheim, A. (2009). Support tools for evidence-informed health policymaking (STP) 1: What is evidence-informed policymaking? Health Research Policy and Systems. http://dx.doi.org/10.1186/1478-4505-7-S1-S1

Paisley, W. (1993). Knowledge utilization: The role of new communications technologies. Journal of the American Society for Information Science, May, 222-234.

Perry, A., Amadeo, C., Fletcher, M. & Walker, E. (2010). Instinct or reason: How education policy is made and how we might make it better. Reading: CfBT.

Rich, R. (1991). Knowledge creation, dissemination, and utilization. Knowledge: The International Journal of Knowledge Transfer and Utilization, 12(3), 319-337.

Rich, A. (2005). Think tanks, public policy and the politics of expertise. Cambridge: Cambridge University Press.

Rickinson, M., Sebba, J. & Edwards, A. (2011). Improving research through user engagement. London: Routledge.

Stronach, I. & MacLure, M. (1997). Educational research undone: The postmodern embrace. Buckingham: Open University Press.

Sylva, K., Taggart, B., Melhuish, E., Sammons, P. & Siraj-Blatchford, I. (2007). Changing models of research to inform educational policy. Research Papers in Education, 22(2), 155-168. http://dx.doi.org/10.1080/02671520701296098

Watson, D., Townsley, R. & Abbott, D. (2002). Exploring multi-agency working in services to disabled children with complex healthcare needs and their families. Journal of Clinical Nursing, 11, 267-79.

Weiss, C. (1979). The many meanings of research utilisation. Public Administration Review, 39(5), 426-431.

Weiss, C. (1998). Have we learnt anything new about the use of evaluation? American Journal of Evaluation, 19(1), 12-13.

Wingens, M. (1990). Toward a general utilization theory: A systems theory reformulation of the two-communities metaphor. Science Communication, 12(1), 27-42. http://dx.doi.org/10.1177/107554709001200103

Yin, R.K. & Moore, G.B. (1988). Lessons on the utilisation of research from nine case study experiences in the natural hazards field. Knowledge, Technology & Policy, 1(3), 25-44. http://dx.doi.org/10.1007/BF02736981

Author: Dr Chris Brown

Chris completed his DPhil in 2011 (University of Sussex) and now works as a Researcher at the Institute of Education. Prior to this, Chris was Head of Research at the Training and Development Agency for Schools. Chris's research interests include evidence-informed policy-making and the broader subject of knowledge adoption.

Email: christopher.brown@mac.com

Please cite as: Brown, C. (2012). Adoption by policy makers of knowledge from educational research: An alternative perspective. Issues In Educational Research, 22(2), 91-110. http://www.iier.org.au/iier22/brown.html