
Blended learning in higher education: Current and future challenges in surveying education

Ahmed El-Mowafy, Michael Kuhn and Tony Snow

Curtin University

The development of a blended learning approach to enhance surveying education is discussed. The need for this learning strategy is first investigated based on a major review of the surveying course, including analysis of its content, benchmarking with key national and international universities, and surveys of key stakeholders. Appropriate blended learning methods and tools that couple learning theory principles and developing technical skills are discussed including using learning management systems, flip teaching, collaborative learning, simulation based e-learning, and peer assessment. Two blended-learning tools developed for surveying units are presented as examples. The first is an online interactive virtual simulation tool for levelling, one of the key tasks in surveying. The second is an e-assessment digital marking, moderation and feedback module. Surveys of students showed that they found the interactive simulation tool contributes to improving their understanding of required tasks. Students also found the e-assessment tool helpful in improving their performance and in helping them to focus on the objectives of each activity. In addition, the use of peer e-assessment to improve student learning and as a diagnostic tool for tutors is demonstrated. The paper concludes with a discussion on developing generic skills through authentic learning in surveying education.

Rapid technological change can push higher education towards training (Burtch, 2005): in trying to keep up with new technology, more focus may be put on skill development than on learning theoretical principles. A balance between the two components should therefore always be maintained. To face this challenge, a blended learning approach, in which face to face classroom methods are combined with computer-mediated activities (Strauss, 2012), can be used to combine technology with pedagogical principles for the benefit of student learning (Garrison & Kanuka, 2004; Hoic-Bozic et al., 2009).

This paper is an extension of El-Mowafy et al. (2013) and presents a blended teaching approach using surveying education as an example. In addition to classroom learning, it includes online learning and mobile learning. Blended learning couples the gaining of knowledge with traditional skills-development learning (authentic learning). Figure 1 illustrates the components of blended learning and its target outcomes. Classroom learning is still considered the largest learning component.

Figure 1: Blended learning methodology

The paper discusses how blended learning methods have been applied to face some of the current and future challenges in the surveying education field. Firstly, the need for a blended learning approach in addressing rapid technology change in higher education is discussed; this need emerged from a recent review of the Bachelor of Surveying at Curtin University, Perth, Australia. This review was originally performed to gauge learning and teaching efficiency, and to evaluate content and the use of new technologies in teaching. While the former has to satisfy the needs of stakeholders (e.g. the surveying profession), the latter has to address the problems of teaching a content-rich syllabus with limited resources, as found in surveying education. The key outcomes and observations of this course review are presented to show the need for blended learning. Next, the paper discusses some blended learning methods and tools. Examples are given of the efficient inclusion of some of these methods and tools in surveying education, such as simulation-based e-learning (SIMBEL), the use of e-assessment as a marking, moderation and feedback tool, and peer assessment. The paper concludes by providing an example of how authentic learning in surveying is used to develop generic practical skills. While the focus of this paper is on education in surveying, the authors believe that the methods outlined can be useful to other disciplines in applied sciences, such as engineering, agriculture, mining and physical education.

In surveying, the main aspects illustrated in Figure 2 have recently been addressed during a major course review of the Bachelor of Surveying offered by the Department of Spatial Sciences at Curtin University, Perth, Australia. It was concluded that a blended learning strategy was the appropriate approach to achieve the program goals of enhancing the learning process, developing generic and technical skills, and rectifying course structure problems identified. The main points from the review that supported these conclusions were:

Figure 2: General layout of a course review

The perceptions of the 11 course team members generally showed similar trends to the industry insights. As the analysis and benchmarking of the existing course revealed no major knowledge gaps, the main focus of this course review was on assuring that knowledge is adequately covered. Current students and recent graduates were also asked to respond to questionnaires relating to their perception of the course and preparedness for the surveying industry. A key outcome was confirmation that the inclusion of many practical survey exercises (about 25% of the course has a practical engagement) contributed substantially to high student satisfaction. In other words, students appear to appreciate authentic or work-integrated learning.

Furthermore, all changes made were scrutinised from a holistic view of the total course, to ensure enhancement of student success and satisfaction. In addition, the definition of the new course structure incorporated information from, and implementation of, the 'surveying body of knowledge' (e.g. Greenfeld, 2008). Problems raised by the industry regarding a lack of generic skills have been addressed through curriculum mapping that ensured the syllabi of all units were updated and assessments were matched to the University's core graduate attributes (e.g. generic skills).

Central to e-learning approaches are learning management systems (LMS), such as Blackboard Academic Suite, that administer web-based learning activities (Garrison & Vaughan, 2008). Already common in many higher education institutions, LMS are used to assist in the delivery and management of learning-related material, such as course notes, lecture recordings, e-assessments and discussion forums. Like other web-based technologies, LMS have the advantage of being continuously available from any location with access to the Internet. LMS can be used both for the delivery of fully online courses and for the enhancement of traditional face to face classroom teaching.

The concept of flip teaching is commonly based on written material and videos. This approach to blended learning replaces traditional face to face classroom lectures and is a form of active and collaborative learning (Silberman, 1996; Prince, 2004). In flip teaching, students are provided with learning material (e.g. course notes and videos of lectures) to prepare themselves for the classroom and/or practical activities. Instead of traditional passive teaching in the classroom, teachers can focus more on specific questions and/or problems raised by tutors and students that promote or reinforce the targeted subjects' outcomes. Flip teaching has been trialled successfully in some surveying units (e.g. GPS Surveying), where students are actively involved in addressing questions, debating and finding solutions to problems that address the desired learning outcomes.

According to Assessment and teaching of 21st century skills (Griffin, McGaw & Care, 2012), collaborative learning is an important skill in the 21st century. It directly addresses some of the generic skills such as problem solving, critical thinking and communication. While collaborative learning is not a new concept, it has recently gained a new dimension with computer-assisted methodologies such as the use of Web 2.0 technology, LMS and social media. In collaborative learning, which encourages teamwork, students benefit from an active exchange of knowledge and ideas and can monitor one another's work. Today this process is becoming more computer-assisted, which allows collaboration to take place without any face to face contact. This seems to fit the more mobile nature of today's students, who can contribute fully at any time and from any location. Social media is therefore becoming particularly important in facilitating the exchange of user-generated content and online discussions. As surveying exercises typically involve group work activities, collaborative learning is essential in a number of units within the course.

Video technology can be used as an educational tool for the development and documentation of practical skills (e.g. Frehner et al., 2012). Video analysis is commonly used in sports coaching and education, and in the professional development of teachers (e.g. Rich & Hannafin, 2009). In surveying education, video analysis can be used in two ways: for the demonstration of typical practical procedures, and for the recording of students' practical performance.

By providing authentic-like recordings, instructors do not need to spend a significant proportion of their time explaining routine procedures, but can focus more on specific problems. In addition, students can follow the video instructions in their own time and at their own pace. Video recording of students' practical performance can be a powerful tool by allowing self-analysis and reflective practice. Furthermore, video evidence can be taken for assessment purposes. An analysis of emerging video annotation tools is provided by Rich & Hannafin (2009).

Simulation-based e-learning (SIMBEL; Kindley, 2002) offers great potential to develop practical skills in a virtual environment. The student is able to learn the practical skills required at a given workplace through the simulation of real-world scenarios. SIMBEL also provides the opportunity for students to engage, experiment and reflect. According to Slotte & Herbert (2008), the experimental nature is of great importance in allowing students to study cause-and-effect relationships. In addition, SIMBEL is of great importance for training with fragile and/or expensive instruments or training for work in a hazardous environment. As this is also the case in surveying education, SIMBEL can be an effective tool, as shown by one example in the following section. Using SIMBEL, students can be prepared for specific work routines without the need for face to face instructions. The time saved can be used by lecturers and tutors to assist students with more specific problems.

In surveying, students typically exercise each practical skill in just one session. As a result, their practical experience is limited to conclusions derived from their own work. One efficient way of improving students' experience is by involving them in peer assessment of other groups' work (Falchikov, 2005, 2007). In addition, peers work closely together and may therefore have a greater number of accurate behavioural observations of each other (DeNisi et al., 1983; Greguras et al., 2001).

Based on a teacher's grading scheme (e.g. rubrics), students grade their own or one another's work. While marking, students can learn from their own or another's mistakes and recognise their own strengths and weaknesses. In addition, teachers or tutors can save time in this grading process as the grading is done simultaneously for the whole group. As demonstrated in the following section, this type of evaluation can be assisted by electronic assessment (e-assessment) technologies that are able to automatically mark and provide feedback (Crisp, 2007). The potential of peer and self-assessment to enhance student learning in surveying fieldwork was investigated over a period of two years in the unit "GPS Surveying". According to feedback received from participating students, peer assessment was an efficient active learning tool that was useful for formative assessment and helped them to address the learning objectives of the fieldwork.

Assessment is focused on improving the learning process by examining the adopted strategies with the goal of enhancing them (Bloxham & Boyd, 2007). It has a focus on future learning that reportedly improves both short and long-term outcomes by helping students to make increasingly sophisticated judgments about their learning (Thomas et al., 2011; Boud & Falchikov, 2007). While e-assessments are particularly suited to assess cognitive skills (e.g. memory), e-portfolios can be used to assess practical skills, the main component in surveying education. E-portfolios are becoming more popular in assessing the proficiency of a student on either a particular practical skill or in a general field. This is done by the collection of electronic evidence (e.g. computer assisted) that documents the proficiency. Evidence can be of various types such as written reports, diaries, pictures, audio, video, multimedia, hyperlinks, etc. While being a collection of evidence, an e-portfolio can also help develop communication skills as a result of the assembly of all evidence and presentation of the student's work.

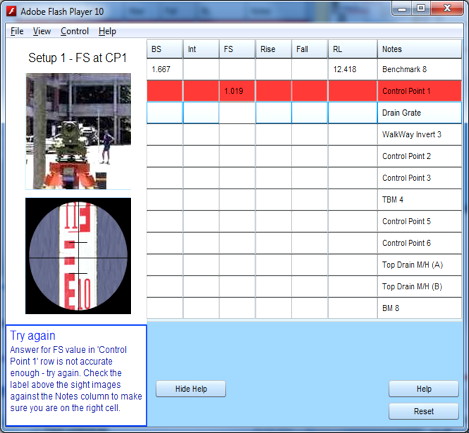

The interface simulation module is split into three parts. The first part is to practise reading of the levelling staff using the level instrument, the key field observation component. The second part of the interface is the computations associated with the fieldwork observations. The third component is the checking procedures used by surveyors to ensure both the fieldwork and calculations are correct. Figure 3 shows the interface of the online simulation for levelling during its use (Figure 3a) and after it has been carried out (Figure 3b). The simulation tool has been tested and used in the basic surveying unit "Plane and Construction Surveying 100" (PCS100), offered by the Department of Spatial Sciences, Curtin University, as well as other service units in basic surveying.

Figure 3a: Interface of the simulation tool during its use

Figure 3b: Interface of the simulation tool after computations
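The computation and checking parts of the levelling exercise can be illustrated with a short sketch. The internals of the simulation tool are not described in this paper, so the Python below is not its implementation; it only shows the standard rise-and-fall reduction and the usual arithmetic check (sum of backsights minus sum of foresights equals last reduced level minus first). The names `Setup`, `reduce_levels` and `arithmetic_check`, the benchmark level and the staff readings are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Setup:
    """One instrument setup: backsight and foresight staff readings in metres."""
    backsight: float
    foresight: float


def reduce_levels(start_rl: float, setups: List[Setup]) -> List[float]:
    """Rise-and-fall reduction: return the reduced level (RL) of every point."""
    levels = [start_rl]
    for s in setups:
        rise_or_fall = s.backsight - s.foresight  # positive is a rise, negative a fall
        levels.append(levels[-1] + rise_or_fall)
    return levels


def arithmetic_check(setups: List[Setup], booked_levels: List[float],
                     tol: float = 1e-6) -> bool:
    """Check that sum(BS) - sum(FS) equals last RL - first RL in the booked values."""
    sum_bs = sum(s.backsight for s in setups)
    sum_fs = sum(s.foresight for s in setups)
    height_change = booked_levels[-1] - booked_levels[0]
    return abs((sum_bs - sum_fs) - height_change) < tol


# Hypothetical readings for a three-setup level run starting at RL 10.000 m.
setups = [Setup(1.525, 1.210), Setup(1.340, 1.685), Setup(0.985, 1.105)]
levels = reduce_levels(10.000, setups)
check_passed = arithmetic_check(setups, levels)
```

If a student books one reduced level incorrectly, passing the booked values to `arithmetic_check` returns `False`, which is the kind of cue the third part of the exercise is meant to provide.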

The feedback from students who have used the virtual online simulation tool has been positive in terms of the module's usefulness in developing their understanding and ability to carry out the levelling field exercise. In 2011, 42 students studying the unit "Plane and Construction Surveying" responded to a questionnaire regarding their experiences using the virtual levelling simulation tool. Students found the interactive simulation tool most useful, with comments showing that it was used successfully to practise skills both before and after the field exercise with real-world equipment. The basic questions asked in the questionnaire were:

| Number of participating students | Agree that the tool improves their accuracy | Agree that the tool improves understanding | Time spent using the simulation module: < 5 min | Time spent: 5-15 min | Time spent: 15-30 min | Time spent: 30-60 min |
| --- | --- | --- | --- | --- | --- | --- |
| 42 | 90.5% | 92.8% | 9.5% | 40.4% | 42.9% | 7.2% |

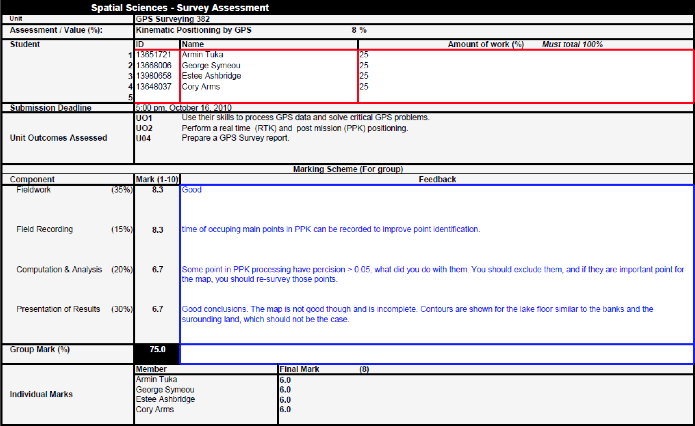

The templates (rubrics) have been incorporated for three years into four surveying units at Curtin University (Plane and Construction Surveying, Engineering Surveying, Mine Surveying and Mapping, and GPS Surveying). Tutors for each of these units use a digital copy of the rubrics. The marking feedback e-tools were designed with two sheets. The first provides marks according to performance level in sub-tasks in each fieldwork activity. Four main assessment components were identified for use in the rubrics: fieldwork, field recording, computation and analysis, and presentation of results. The first two components are related to activities performed in the field, whilst the last two components are carried out in the office environment after data collection and verification. These four areas are further broken down into four subcategories that are individually assessed. Each assessment criterion is quantified and varied according to each task/laboratory. The activities for each task have been described and linked to different performance levels that are set to meet the common industry standards for fieldwork execution. A marking scale is linked to each performance category level and the final mark for the assignment is derived from each category level box selected by the marker. Figure 4 illustrates an example of the first sheet of the marking and feedback e-tool.

Figure 4: Sheet 1 - Marking based on well-defined performance level in each task

Preparation of the templates in a digital format has served to streamline their use in the calculation of marks and the statistical analysis of results. In addition, the templates are used as a tool to provide specific feedback to students for each fieldwork activity. The second sheet of the marking and feedback tool presents the assessment outcome: a calculator tool is applied automatically and assigns marks to each student according to the performance in each activity scored in the first sheet and the student's percentage contribution. Figure 5 illustrates an example of the calculation sheet component of the rubric. The system is designed to provide an accurate, fair and consistent moderation approach that reduces variability in the moderation of fieldwork between different assessors, as they use the same marking scale for different tasks according to a well-defined performance level in each activity.
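How the two sheets fit together can be sketched in a few lines of Python. The actual marking scale, the marks allocated to each subcategory and the exact rule by which the calculator combines the group score with a student's contribution are not given in the text, so `LEVEL_SCALE`, `MARKS_PER_SUBCATEGORY`, the level names and the proportional scaling in `student_marks` are hypothetical placeholders rather than the tool's real settings.

```python
from typing import Dict, List

# The four main assessment components named in the rubric.
COMPONENTS = (
    "fieldwork",
    "field recording",
    "computation and analysis",
    "presentation of results",
)

# Hypothetical marking scale: fraction of a subcategory's marks per performance level.
LEVEL_SCALE: Dict[str, float] = {
    "excellent": 1.0,
    "good": 0.75,
    "satisfactory": 0.5,
    "poor": 0.25,
}

# Hypothetical allocation: 4 components x 4 subcategories x 6.25 = 100 marks.
MARKS_PER_SUBCATEGORY = 6.25


def group_mark(selected: Dict[str, List[str]]) -> float:
    """Sheet 1: sum the marks for the performance level chosen in each subcategory."""
    total = 0.0
    for component in COMPONENTS:
        levels = selected[component]
        if len(levels) != 4:
            raise ValueError(f"{component!r} needs exactly four subcategory levels")
        total += sum(LEVEL_SCALE[level] * MARKS_PER_SUBCATEGORY for level in levels)
    return total


def student_marks(group_total: float, contributions: Dict[str, float]) -> Dict[str, float]:
    """Sheet 2 (assumed rule): scale the group mark by each student's contribution in %."""
    return {name: group_total * pct / 100.0 for name, pct in contributions.items()}


# Example: a group judged "good" in every subcategory, with two hypothetical students.
all_good = {component: ["good"] * 4 for component in COMPONENTS}
total = group_mark(all_good)
marks = student_marks(total, {"student_a": 100.0, "student_b": 80.0})
```

Because every assessor selects from the same level boxes, two markers who agree on the performance levels necessarily arrive at the same mark, which is the consistency property the moderation approach relies on.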

Testing of the first version of the group assignment marking tool has shown that it is very useful in helping students both to focus on the objectives of each activity and to match effort and achievement to the assigned marks. A survey was conducted with students who had used the marking rubric to obtain feedback regarding its usage and value to their understanding of the practical work requirements. The students' feedback showed that they found the marking rubric helpful in assisting their understanding of practical task requirements and in improving their performance and response to marking outcomes (Gulland et al., 2012b). In Curtin University's online survey system for gathering and reporting student feedback on their learning experiences (eVALuate), student satisfaction in the surveying area has risen by 5% on average compared with the years before the implementation of the marking/feedback tool. The response of the industry, received through another questionnaire, was encouraging and provided valuable comments and recommendations. These will be taken into consideration in the development of an improved version of the rubrics.

Figure 5: Digital calculator tool and feedback of the rubrics

Table 2 shows the absolute mean and dispersion (measured by the standard deviation) of differences in marks between peer and tutors' marks for the tested field sessions. Mean and standard deviation were computed as percentages from the total mark for the four subcategories for each of the four identified main assessment components (see Figure 4), namely fieldwork, field recording, computation and analysis, and presentation of results. We also empirically checked that the marks included in computation of mean and standard deviation do not include outliers, i.e. the differences between marks given for each fieldwork component from its mean did not exceed three times the value of the standard deviation computed from the overall marks given to this component.

| Component/score | mean % | std. dev. % |
| --- | --- | --- |
| Fieldwork | 6.60 | 3.71 |
| Field recording | 6.41 | 4.68 |
| Computation and analysis | 7.37 | 1.79 |
| Presentation of results | 6.92 | 4.52 |
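The statistics behind Table 2 can be sketched as follows. This is an illustration under stated assumptions, not the authors' script: "absolute mean" is read here as the mean of the absolute peer-tutor differences, the sample standard deviation is used, and the marks, the maximum mark of 20 and the function names `difference_stats` and `find_outliers` are invented for the example.

```python
from statistics import mean, stdev
from typing import List, Tuple


def difference_stats(peer: List[float], tutor: List[float],
                     max_mark: float) -> Tuple[float, float]:
    """Mean and sample std. dev. of |peer - tutor|, as a percentage of max_mark."""
    diffs = [100.0 * abs(p - t) / max_mark for p, t in zip(peer, tutor)]
    return mean(diffs), stdev(diffs)


def find_outliers(marks: List[float], k: float = 3.0) -> List[float]:
    """Return marks lying more than k standard deviations from their mean."""
    m = mean(marks)
    s = stdev(marks)
    return [v for v in marks if abs(v - m) > k * s]


# Hypothetical marks out of 20 given to four groups for one component.
peer = [16.0, 18.0, 14.0, 17.0]
tutor = [15.0, 16.0, 15.0, 18.0]

mean_pct, std_pct = difference_stats(peer, tutor, max_mark=20.0)
flagged = find_outliers(tutor)  # empty list means no mark fails the three-sigma check
```

With only a handful of marks a three-sigma rule can rarely flag anything, so a check of this kind is mainly informative when the marks for a component are pooled over many groups and sessions.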

Results in Table 2 show that the differences between the marks of peer assessors and those of the tutors were marginally smaller for the first two components, fieldwork and field recording, which are carried out in the field, than for the two office work components, computation and analysis and presentation of results. This can be explained by differences in the breadth and depth of experience between students and tutors when assessing skills, structure and presentation of results. This is supported by the fact that the subcategories with the largest differences in the office work tasks were 'acceptable results achieved', 'clear and well-structured report elements' and 'required plans/maps/tables', with average differences of 10%, 9% and 8% respectively. For the fieldwork components, the subcategories with the largest differences were 'closing/checking observations taken before leaving site' and 'clear and complete field notes presented', where discrepancies between tutors and peer assessors were 9% and 11% respectively.

These differences indicated to the tutors that more explanation of the assessment criteria and of their expectations was needed for the components with such large differences between peer assessors and tutors. The peer-assessment experiment was therefore a good tool to improve our teaching approach and to identify the activities that required improvement. In addition, based on discussions with students and on tracking changes in their reports before and after the application of peer assessment, it is apparent that peer assessment helps students to improve their approaches to problem solving by learning from the mistakes and innovations of others, providing constructive criticism to peers, following key learning objectives, and appreciating the importance of arriving at the correct final solution.

Each practical surveying exercise can be defined as a problem that has to be solved. This means that students have to apply their theoretical knowledge in order to carry out appropriate practical operations. This process requires problem solving and critical thinking skills (e.g. designing the practical operation so as to ensure an optimal outcome) and also develops practical surveying skills. Communication skills are also necessary; these are developed through the design of tasks that require teamwork as well as the analysis and presentation of results.

A common practical exercise in surveying is designed to include the following tasks:

Some examples of blended learning in surveying education were presented, together with information on some key concepts in blended learning. Both provide some insight into blended learning, which is likely to become the standard in education in the coming years. In fact, many higher education institutions are already in transition from traditional classroom teaching to some form of blended learning, by increasing the use of e-learning and e-assessment components.

Surveying education in particular, and education in applied science disciplines in general, rely heavily on authentic learning in order to develop generic, technical and practical skills. In this regard, we have shown that SIMBEL offers great potential to develop practical skills in a virtual environment. Where time and resources are short, SIMBEL can provide a high-quality alternative to face to face training. We have provided an example of how SIMBEL was included in surveying education, and students overwhelmingly agreed that the tool was helpful in improving their skills and knowledge. Therefore, we believe that SIMBEL should be a key element in any form of authentic learning.

The clear definition of the fieldwork tasks and their marking scales associated with different performance levels in the form of e-assessment templates (e.g. rubrics) can help students improve their performance in the practical labs. The use of structured grading schemes and moderation of marking is vital to stimulate and guide students' efforts in addressing all fieldwork tasks and their objectives. Preparation of the templates in a digital format has served to streamline their use in the calculation of marks and the statistical analysis of results. In addition, the templates can be efficiently used as a tool to provide specific feedback to students for each activity. Finally, peer assessment was trialled in surveying fieldwork and was found to be a good tool for identifying the activities where tutors' explanations need improving, as well as for helping students gain more experience.

Boud, D. & Falchikov, N. (2007). Developing assessment for informing judgement. In D. Boud & N. Falchikov (Eds.), Rethinking assessment in higher education: Learning for the longer term (pp. 181-197). London: Routledge.

Brennan, R. (2001). An essay on the history and future of reliability from the perspective of replications. Journal of Educational Measurement, 38(4), 295-317. http://dx.doi.org/10.1111/j.1745-3984.2001.tb01129.x

Burtch, R. (2005). Surveying education and technology: Who's zooming who? Surveying and Land Information Science, 65(3), 135-143.

Cho, K., Schunn, C. & Wilson, R. (2006). Validity and reliability of scaffolded peer assessment of writing from instructor and student perspectives. Journal of Educational Psychology, 98(4), 891-901. http://psycnet.apa.org/doi/10.1037/0022-0663.98.4.891 [also at http://wac.sfsu.edu/sites/default/files/cho_peerassessment.pdf]

Crisp, G. (2007). The e-assessment handbook. London: Continuum.

DeNisi, A. S., Randolph, W. A. & Blencoe, A. G. (1983). Potential problems with peer ratings. Academy of Management Journal, 26(3), 457-464. http://www.jstor.org/stable/256256

El-Mowafy, A., Kuhn, M. & Snow, T. (2013). A blended learning approach in higher education: A case study from surveying education. In Design, develop, evaluate: The core of the learning environment. Proceedings of the 22nd Annual Teaching Learning Forum, 7-8 February 2013. Perth: Murdoch University. http://ctl.curtin.edu.au/professional_development/conferences/tlf/tlf2013/refereed/el-mowafy.html

Falchikov, N. (2005). Improving assessment through student involvement: Practical solutions for aiding learning in higher and further education. New York: Routledge Falmer.

Falchikov, N. (2007). The place of peers in learning and assessment. In D. Boud & N. Falchikov (Eds.), Rethinking assessment in higher education: Learning for the longer term (pp. 128-143). London: Routledge.

Frehner, E., Tulloch, A. & Glaister, K. (2012). "Mirror, mirror on the wall": The power of video feedback to enable students to prepare for clinical practice. In A. Herrington, J. Schrape & K. Singh (Eds.), Engaging students with learning technologies. Curtin University. http://espace.library.curtin.edu.au/R?func=dbin-jump-full&local_base=gen01-era02&object_id=189434

Garrison, D. R. (2011). E-learning in the 21st century: A framework for research and practice. Book News, Inc., Portland, USA.

Garrison, D. R. & Vaughan, N. D. (2008). Blended learning in higher education. San Francisco: Jossey-Bass.

Garrison, D. R. & Kanuka, H. (2004). Blended learning: Uncovering its transformative potential in higher education. The Internet and Higher Education, 7(2), 95-105. http://dx.doi.org/10.1016/j.iheduc.2004.02.001

Greenfeld, J. (2011). Surveying body of knowledge. Surveying and Land Information Science, 71(3-4), 105-113. http://www.ingentaconnect.com/content/nsps/salis/2011/00000071/F0020003/art00002

Greenfeld, J. & Potts, L. (2008). Surveying body of knowledge - preparing professional surveyors for the 21st century. Surveying and Land Information Science, 68(3), 133-143. http://www.ingentaconnect.com/content/nsps/salis/2008/00000068/00000003/art00003

Greguras, G. J., Robie, C. & Born, M. P. (2001). Applying the social relations model to self and peer evaluations. The Journal of Management Development, 20(6), 508-525. [viewed 3 March 2013]. http://www.emeraldinsight.com/journals.htm/journals.htm?issn=0262-711&volume=20&issue=6&articleid=880450&show=html

Griffin, P., McGaw, B. & Care, E. (2012). Assessment and teaching of 21st century skills. Dordrecht: Springer.

Gulland, E. K., El-Mowafy, A. & Snow, T. (2012a). Developing interactive tools to augment traditional teaching and learning in land surveying. In Creating an inclusive learning environment: Engagement, equity, and retention. Proceedings of the 21st Annual Teaching Learning Forum, 2-3 February 2012. Perth: Murdoch University. http://ctl.curtin.edu.au/professional_development/conferences/tlf/tlf2012/abstracts.html#gulland1

Gulland, E. K., El-Mowafy, A. & Snow, T. (2012b). Marking moderation in land surveying units. In Creating an inclusive learning environment: Engagement, equity, and retention. Proceedings of the 21st Annual Teaching Learning Forum, 2-3 February 2012. Perth: Murdoch University. http://ctl.curtin.edu.au/professional_development/conferences/tlf/tlf2012/abstracts.html#gulland2

Herrington, A., Schrape, J. & Singh, K. (Eds) (2012). Engaging students with learning technologies. Perth: Curtin University. http://espace.library.curtin.edu.au/R/?func=dbin-jump-full&object_id=187303&local_base=GEN01-ERA02

Hoic-Bozic, N., Mornar, V. & Boticki, I. (2009). A blended learning approach to course design and implementation. IEEE Transactions on Education, 52(1), 19-30. http://dx.doi.org/10.1109/TE.2007.914945

Kindley, R. (2002). The power of simulation-based e-learning (SIMBEL). The eLearning Developers' Journal, 12 September. [viewed 27 Sep 2012]. http://library.marketplace6.com/papers/pdfs/Kindley-Sept--17-2002.pdf

Oliver, B., Hunt, L., Jones, S., Pearce, A., Hammer, S., Jones, S. & Whelan, B. (2010). The graduate employability indicators: Capturing broader stakeholder perspectives on the achievement and importance of employability attributes. In Quality in uncertain times. Proceedings of the Australian Quality Forum 2010, 30 June - 2 July, 2010, Gold Coast, Australia, pp. 89-95. http://eprints.usq.edu.au/8273/

Oliver, B. (2011). Good practice report: Assuring graduate outcomes. Australian Learning and Teaching Council, Strawberry Hills, NSW, Australia, pp. 49. http://www.olt.gov.au/resource-assuring-graduate-outcomes-curtin-2011

Prince, M. (2004). Does active learning work? A review of the research. Journal of Engineering Education, 93(3), 223-232. http://www.jee.org/2004/july/800.pdf

Rich, P. J. & Hannafin, M. (2009). Video annotation tools: Technologies to scaffold, structure, and transform teacher reflection. Journal of Teacher Education, 60(1), 52-67. http://dx.doi.org/10.1177/0022487108328486

Silberman, M. (1996). Active learning: 101 strategies to teach any subject. Boston: Allyn & Bacon.

Slotte, V. & Herbert, A. (2008). Engaging workers in simulation-based e-learning. Journal of Workplace Learning, 20(3), 165-180. http://dx.doi.org/10.1108/13665620810860477

Strauss, V. (2012). Three fears about blended learning. The Washington Post, 22 Sept. http://www.washingtonpost.com/blogs/answer-sheet/post/three-fears-about-blended-learning/2012/09/22/56af57cc-035d-11e2-91e7-2962c74e7738_blog.html

Strobl, J. (2007). Geographic learning. Geoconnexion International Magazine, 6(5), 46-47. http://www.geoconnexion.com/publications/geo-international/

Thomas, G., Martin, D. & Pleasants, K. (2011). Using self- and peer-assessment to enhance students' future-learning in higher education. Journal of University Teaching & Learning Practice, 8(1), 1-17. http://ro.uow.edu.au/cgi/viewcontent.cgi?article=1112&context=jutlp

The articles in this Special issue, Teaching and learning in higher education: Western Australia's TL Forum, were invited from the peer-reviewed full papers accepted for the Forum, and were subjected to a further peer review process conducted by the Editorial Subcommittee for the Special issue. Authors accepted for the Special issue were given options to make minor or major revisions (major additions in the case of El-Mowafy, Kuhn and Snow). The reference for the Forum version of their article is:

El-Mowafy, A., Kuhn, M. & Snow, T. (2013). A blended learning approach in higher education: A case study from surveying education. In Design, develop, evaluate: The core of the learning environment. Proceedings of the 22nd Annual Teaching Learning Forum, 7-8 February 2013. Perth: Murdoch University. http://ctl.curtin.edu.au/professional_development/conferences/tlf/tlf2013/refereed/el-mowafy.html

Authors: Dr Ahmed El-Mowafy is a Senior Lecturer, Department of Spatial Sciences, Curtin University. He has more than 20 years of teaching and research experience in the area of surveying and satellite positioning with an extensive publishing record. He is the recipient of a Curtin University 2012 'Excellence & Innovation in Teaching Award' (Physical sciences and related studies). Email: a.el-mowafy@curtin.edu.au

Michael Kuhn is an Associate Professor, Department of Spatial Sciences, Curtin University. Since 1999 he has been research-active in the areas of physical geodesy, geophysics and oceanography, having published more than 55 peer-reviewed journal articles. Michael is a Fellow of the International Association of Geodesy and recipient of an Australian Research Council Postdoctoral Fellowship. Email: m.kuhn@curtin.edu.au

Tony Snow is a Senior Lecturer in the Department of Spatial Sciences and has been teaching at Curtin University for over 24 years. Tony is both a Licensed Surveyor and Authorised Mine Surveyor in WA. Tony was part of a team that received the 'Curtin Excellence and Innovation in Teaching Award' for the development of the levelling simulation tool. Email: t.snow@curtin.edu.au

Please cite as: El-Mowafy, A., Kuhn, M. & Snow, T. (2013). Blended learning in higher education: Current and future challenges in surveying education. In Special issue: Teaching and learning in higher education: Western Australia's TL Forum. Issues in Educational Research, 23(2), 132-150. http://www.iier.org.au/iier23/el-mowafy.html